Vintra Leads Video Analytics Industry in Bias Reduction

Vintra is excited to announce results from its ongoing effort to ensure that its AI platform can equitably recognize and correctly identify faces across different races. This work has cut the bias gap by more than two-thirds. For most racial and ethnic groups, Vintra's accuracy now surpasses that of the leading commercially available face recognition algorithms from Microsoft and Amazon, as well as leading open-source face recognition algorithms such as ArcFace.

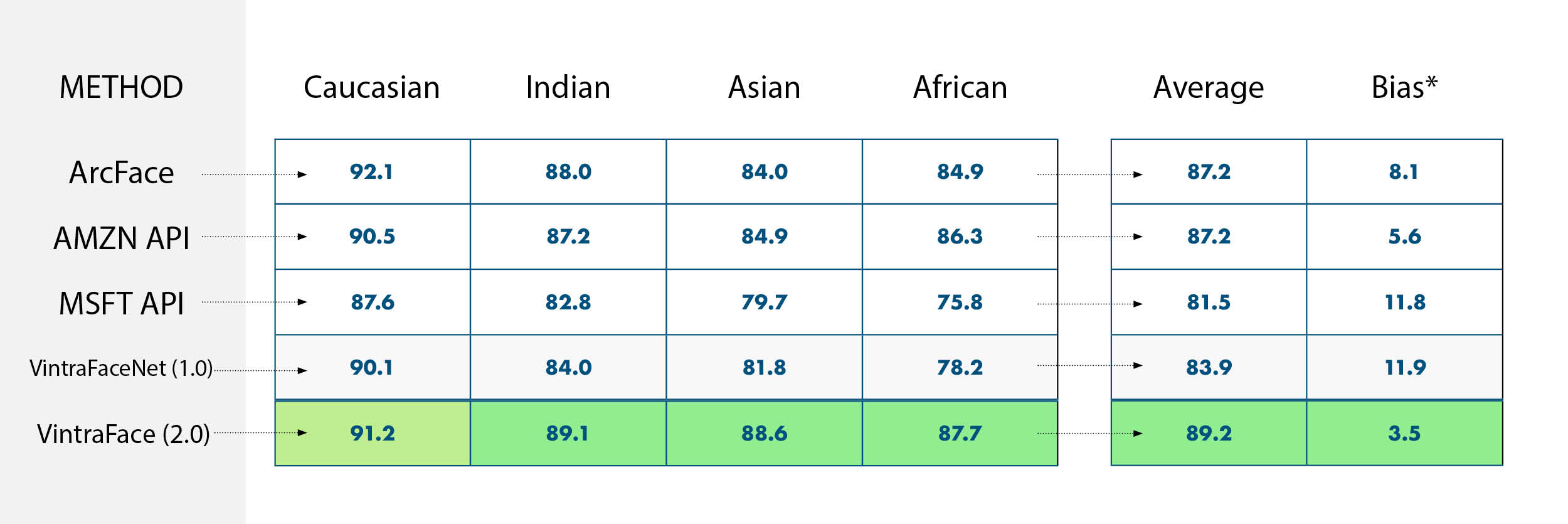

*Bias is defined as the maximum difference in accuracy between any two classes (racial groups). Vintra Face 2.0 results as of April 1, 2020.

The analysis above was computed on the widely accepted “Racial Faces in the Wild” (RFW) dataset and shows that Vintra has the lowest bias of the systems compared. The authors of the RFW paper computed results for each ethnicity using Amazon's and Microsoft's face recognition APIs. The average of those results is shown above for reference.
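To make the footnoted definition concrete, here is a minimal sketch of bias computed as the largest accuracy difference between any two groups. The function name `max_accuracy_delta` and the accuracy figures are hypothetical and chosen only for illustration. They are not Vintra's, Amazon's, Microsoft's, or RFW's published results. The sketch assumes per-group accuracies are already available as percentages.

```python
from itertools import combinations
from typing import Mapping


def max_accuracy_delta(accuracy_by_group: Mapping[str, float]) -> float:
    """Bias as the largest accuracy difference between any two groups.

    With accuracies given in percent, the result is in percentage points.
    """
    if len(accuracy_by_group) < 2:
        raise ValueError("need accuracies for at least two groups")
    # Compare every pair of groups and keep the largest absolute gap
    # (equivalent to max(accuracy) - min(accuracy)).
    return max(
        abs(a - b) for a, b in combinations(accuracy_by_group.values(), 2)
    )


# Hypothetical accuracies (percent), for illustration only; not measured results.
example = {"Caucasian": 95.0, "African": 91.0, "Asian": 92.5, "Indian": 93.0}
print(max_accuracy_delta(example))  # 4.0
```

Checking every pair is equivalent to subtracting the lowest group accuracy from the highest. Spelling out the pairs simply mirrors the "between two classes" wording of the definition.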

Vintra CEO Brent Boekestein said, “We built our data models from the ground up and didn’t violate privacy policies by building out our training data. We focused on quality data from day one and adhering to our core principles of integrity and trust. Simply put, it matters how we got here, and it shows in the results.”


The Problem

A major public concern is whether face recognition works properly once it is deployed, and Vintra shares that concern. Machine-learning models are often trained on huge, publicly available data sets that many AI solution providers use to build their products. Unfortunately, most Western-based facial recognition algorithms misidentify faces that aren’t Caucasian or lighter-skinned more often, for two key reasons: (1) core data sets are populated by a supermajority of white faces, and (2) algorithms have been built and tested on these data sets for years. Algorithmic tweaks have tried to address this in the past, but poor representation of any given ethnicity in a data set cannot be overcome by algorithmic changes alone.

Vintra’s Solution

Vintra has built and curated its own data set, drawing on more than 76 countries and tens of thousands of identities, with dozens of reference images for each identity, to better represent Caucasian, African, Asian and Indian ethnicities. The result is a much fairer balance, with each group making up roughly 25% of the data, giving AI-powered video analytics a truer picture of what our world really looks like. While keeping the accuracy of Vintra’s facial recognition results in the top 10% of solutions globally on leading datasets such as RFW, the team set out to reduce the bias gap: the difference in accuracy between correctly identifying white faces and correctly identifying all other, non-white identities.
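The bias gap described above measures each non-white group against white faces. The following is a minimal sketch of that measure, assuming per-group accuracies in percent. The function name `bias_gap_vs_reference`, the default reference label `"Caucasian"`, and the example accuracies are all hypothetical illustrations. They do not describe Vintra's actual evaluation code or results.

```python
from typing import Dict, Mapping


def bias_gap_vs_reference(
    accuracy_by_group: Mapping[str, float], reference: str = "Caucasian"
) -> Dict[str, float]:
    """Accuracy gap (reference minus group) for every non-reference group.

    Positive values mean the group is identified less accurately than the
    reference group; with accuracies in percent, gaps are in percentage points.
    """
    if reference not in accuracy_by_group:
        raise KeyError(f"no accuracy given for reference group {reference!r}")
    ref_acc = accuracy_by_group[reference]
    return {
        group: ref_acc - acc
        for group, acc in accuracy_by_group.items()
        if group != reference
    }


# Hypothetical accuracies (percent), for illustration only; not measured results.
example = {"Caucasian": 95.0, "African": 91.0, "Asian": 92.5, "Indian": 93.0}
gaps = bias_gap_vs_reference(example)
print(gaps)  # {'African': 4.0, 'Asian': 2.5, 'Indian': 2.0}
print(max(gaps.values()))  # 4.0
```

Returning one gap per group keeps the comparison against the reference group explicit. The largest of these gaps summarizes how far the worst-served group trails that reference.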

Vintra Results

Today, publicly available academic algorithms show an 8-percentage-point accuracy gap between black and white faces, while commercially available algorithms show a 9-point gap. Some companies' algorithms, notably Microsoft's, show a 12-percentage-point gap between white and black faces.

In initial testing of the new dataset and algorithm, Vintra has narrowed the racial bias gap between Caucasian and African-descent faces to 3.5 percentage points. Vintra’s average accuracy across all non-Caucasian categories beats popular APIs from Amazon and Microsoft and the leading open-source algorithms on the market. We have more work to do, but progress is being made.

Vintra is committed to ensuring that facial recognition technology can be developed to equitably recognize all types of faces. The fairer and more accurate the results of these solutions are, the more society will accept them as a force for good.

