Purpose-built for security professionals: faster, more accurate and more flexible – at a lower price point

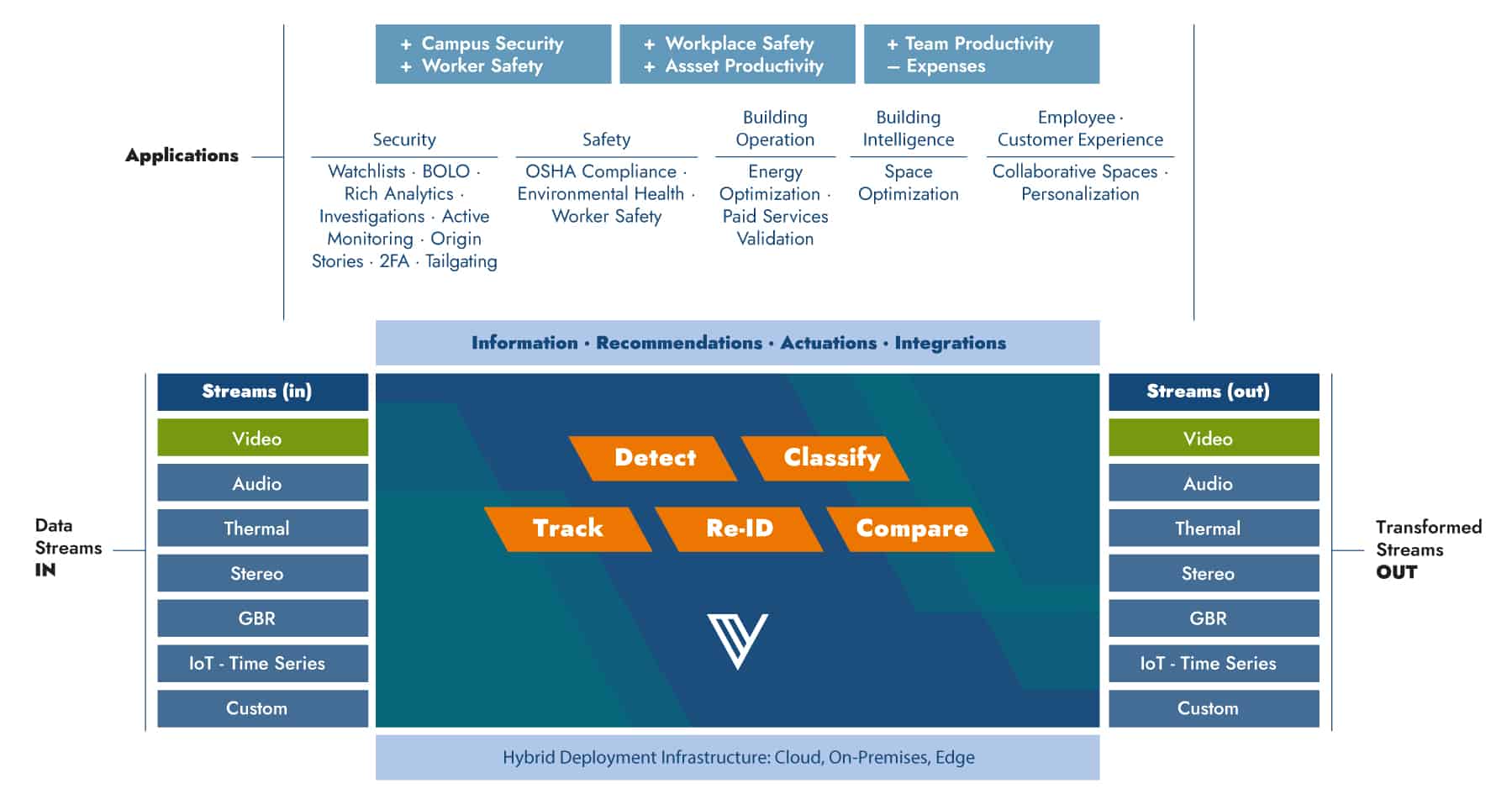

At the heart of these building blocks sits Vintra’s suite of multi-class algorithms for detection, classification, tracking, and re-identification. The suite works with fixed or mobile video, live or recorded, and automatically finds the events that matter so that they can trigger alerts or be searched quickly. This all comes together in our purpose-built, end-to-end solution, which is faster, more accurate and more flexible than the open-source algorithms that underpin many other systems on the market.

At Vintra, our experienced research team has demonstrated the distinct advantages of purpose-built deep learning technology. Purpose-built means that an algorithm is imagined, designed, trained and deployed for a single purpose, such as making sense of security camera footage.

Researchers around the globe have made available a series of open-source detectors, which some providers have adopted as their core AI technology in the hope of speeding up development or reducing the load on their research and engineering teams. However, these models were (a) created and trained to serve as general-purpose detectors, and (b) not designed for a single, end-to-end application.

These two points represent an important limitation for security applications. For mission-critical video analytics, where accuracy and speed are essential, incorporating multiple domain-optimized models is hugely important, and it is a key differentiator in our approach.

As a result, benchmarks consistently show that our models are faster, more accurate, more secure and more flexible (in both how they can be retrained and how they can be deployed) than those built on a purely open-source approach. It’s why three of the Fortune 10 trust Vintra with their most demanding video intelligence applications.